NLP Advances

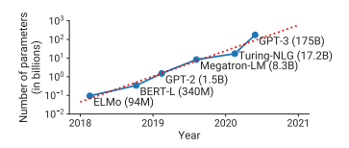

- NLP, NLU, and NLG have progressed by leaps and bounds: from the days of rule-based pattern matching, to static embeddings (Mikolov 2013), to RNNs using LSTM/GRU cells that capture semantic meaning and sequential relationships in text, to modern-day transformers that leverage the attention mechanism (Vaswani 2017) and pretraining on large public corpora. Networks of high-performance GPUs enable us to train and run inference on very large language models (Brown 2020) with upwards of 100B parameters, and the next generation of trillion-parameter models is on the horizon.

NLU

- Natural language is the spoken and written text that pervades our daily interactions, whether we are reading a news article, listening to the daily news, deciphering a financial report, or catching up on email updates. Text is everywhere: tweets, emails, image captions. A passage is not simply a collection of words; it carries contextual meaning and very often demands common-sense understanding of what is left unspoken. In data science terms, we call this a prior.

- There are many challenges in teaching a machine learning algorithm to understand text and to draw out the essential meaning and key intents of a message. The complexity arises for a number of reasons. Words have multiple meanings and often mean different things in different contexts. Lexically, there are different ways to spell a word, and humans sometimes make spelling mistakes. The list goes on.

- To break down the different nuances of mimicking the human ability to understand text, natural language processing and natural language understanding are divided into individual tasks. Many benchmarks with accompanying datasets serve to test and measure a machine's (that is, a language model's) ability to correctly distinguish the meaning behind passages. One such benchmark is GLUE; I have done some work in parameter tuning to run the various benchmarks here.

Key NLU tasks and benchmarks

- An important area of research is measuring the quality and depth of understanding in trained neural models. One such task is NLI (natural language inference), which involves determining whether a premise entails a hypothesis. The HANS paper describes the construction of test cases that check whether an NLI model performs well only because it has learned shallow heuristics: lexical overlap, subsequence, and constituent.
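To make the lexical overlap heuristic concrete, here is a minimal sketch of a baseline that predicts entailment whenever every hypothesis word also appears in the premise. The function names and the crude tokenizer are illustrative assumptions, not code from the HANS paper; the first premise/hypothesis pair follows the style of example that the heuristic gets wrong.

```python
import re

def tokenize(text):
    """Lowercase and split into word tokens (a deliberately crude tokenizer)."""
    return re.findall(r"[a-z']+", text.lower())

def lexical_overlap_predict(premise, hypothesis):
    """Hypothetical shallow baseline: predict 'entailment' whenever every
    hypothesis word also occurs in the premise, ignoring order and syntax."""
    premise_words = set(tokenize(premise))
    hypothesis_words = tokenize(hypothesis)
    if hypothesis_words and all(w in premise_words for w in hypothesis_words):
        return "entailment"
    return "non-entailment"

# (premise, hypothesis, gold label) triples chosen for illustration.
examples = [
    ("The doctor was paid by the actor.", "The doctor paid the actor.", "non-entailment"),
    ("The judge and the lawyer smiled.", "The judge smiled.", "entailment"),
]

for premise, hypothesis, gold in examples:
    predicted = lexical_overlap_predict(premise, hypothesis)
    print(f"{hypothesis!r}: predicted={predicted}, gold={gold}")
```

The heuristic is right on the second pair but wrong on the first, where all hypothesis words appear in the premise yet the roles of doctor and actor are swapped. A model that scores well on ordinary NLI data while failing such cases has likely learned the shortcut rather than the underlying inference.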

- Question Answering

- Machine Reading Comprehension

- cloze - take a part of a sentence as the answer and the rest of the sentence as the question

- multi-choice

- span extraction

- free-form - the answer does not necessarily match words in the context
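Two of the reading comprehension formats above, cloze and span extraction, can be sketched in a few lines. This is a minimal illustration under simple assumptions: the helper names `make_cloze` and `extract_span` are hypothetical, the answer is assumed to occur verbatim in the text, and spans are plain character offsets rather than the token offsets a real dataset might use.

```python
def make_cloze(sentence, answer, blank="_____"):
    """Cloze: blank out the answer; the rest of the sentence is the question."""
    start = sentence.find(answer)
    if start == -1:
        raise ValueError("answer must occur verbatim in the sentence")
    question = sentence[:start] + blank + sentence[start + len(answer):]
    return question, answer

def extract_span(context, start, end):
    """Span extraction: the answer is the substring context[start:end]."""
    return context[start:end]

context = "The transformer architecture was introduced in 2017."

# Cloze-style pair built from the sentence itself.
question, answer = make_cloze(context, "2017")
print(question)

# Span-style answer identified by character offsets into the context.
start = context.find("2017")
span = extract_span(context, start, start + len("2017"))
print(span)
```

In both cases the answer is guaranteed to appear in the context, which is what separates these formats from free-form answering, where the answer may use words the context never contains.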